In a recent BMJ article, an international team of academics and clinicians scoured the machine learning literature and spent two years debating the intricacies of developing and deploying machine learning tools in healthcare.

I’m generally pretty cautious about such guidelines. Not that they aren’t useful; rather, they’re so useful that everyone tries to produce one, and we end up with several of them, with overlapping recommendations and remits. And of course, because the first one will be the most cited, there’s generally a race to publish first, which tends to become a race to the bottom.

However, I’m cautiously optimistic about this one. I’d recommend anyone interested read the full text, but I’ll provide a summary and some thoughts here.

What they did

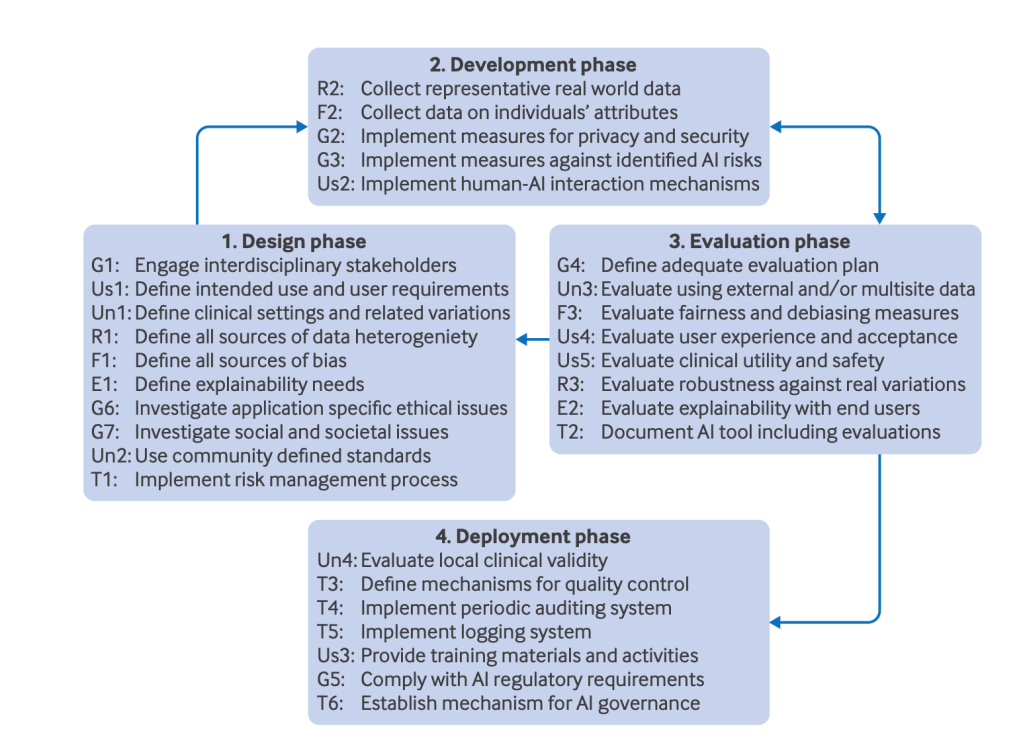

A consortium of 117 interdisciplinary experts (‘AI’ scientists, clinical researchers, biomedical ethicists, and social scientists) from 50 countries consulted over a two-year period to develop guidelines for the development and deployment of AI tools in healthcare. This is different to the suite of reporting guidelines, such as DECIDE-AI, which focus only on best practice for publication (i.e. data sharing and replicability). They came up with 30 recommendations across six categories, which I’ll outline below.

What they found

Fairness

An AI tool should maintain performance across individuals and groups.

There are any number of ways biases can creep into a model, from collecting data with skewed demographics, to feature extraction/selection creating unintended correlations.

They recommend identifying sources of bias, collecting demographic information on individuals, and evaluating fairness. (I’m not sure what they recommend when people decline to give such information: those individuals are likely to share characteristics, so the dataset will end up biased against them.)
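As a rough illustration of what "evaluating fairness" can mean in its simplest form, here is a minimal sketch that computes accuracy separately for each group and reports the gap between the best- and worst-served groups. The function names and toy data are hypothetical, and accuracy is just one possible metric. Given the worry above about people who decline to share their details, records with no group label are kept as their own "unrecorded" bucket rather than silently dropped.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Accuracy within each group; records with no group label are kept as 'unrecorded'."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        key = group if group is not None else "unrecorded"
        total[key] += 1
        correct[key] += int(truth == pred)
    return {key: correct[key] / total[key] for key in total}


def max_accuracy_gap(y_true, y_pred, groups):
    """Difference in accuracy between the best- and worst-served groups."""
    scores = per_group_accuracy(y_true, y_pred, groups)
    return max(scores.values()) - min(scores.values())


# Hypothetical toy data: two groups of four patients each.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(per_group_accuracy(y_true, y_pred, groups))  # {'A': 0.75, 'B': 0.5}
print(max_accuracy_gap(y_true, y_pred, groups))    # 0.25
```

A single headline accuracy for this toy model would be 62.5%, which hides the fact that group B fares noticeably worse.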

I’d be interested to hear from anyone working on the latter, as this sounds like a hard problem. In banking you’d like to be able to change a person’s race, say, whilst holding everything else constant, and see whether the tool changes its output (hopefully not). But in medicine, race is often used as a proxy for genetics in lieu of actual genetic testing. It has predictive power which you’d want to use to help identify disease, so the concept of fairness in medicine might be different to other domains. For instance, you’d want a prostate cancer risk tool to factor in race, rather than ignore it as in the banking case.
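The banking-style check can be sketched as a counterfactual "flip" test: swap only the sensitive attribute, hold every other field constant, and count how often the prediction changes. This is a minimal sketch with hypothetical models and field names (`score`, `group`). Two caveats. First, swapping the attribute does not swap features correlated with it, so a flip rate of zero does not prove a model is blind to group. Second, in the medical case above a nonzero rate may be exactly what you want.

```python
def counterfactual_flip_rate(model, records, attribute, values):
    """Fraction of records whose prediction changes when only `attribute`
    is swapped to another value, with every other field held constant."""
    changed = 0
    for record in records:
        original = model(record)
        for value in values:
            if value == record[attribute]:
                continue
            altered = {**record, attribute: value}
            if model(altered) != original:
                changed += 1
                break  # one differing counterfactual is enough to count this record
    return changed / len(records)


# Hypothetical toy models: one ignores "group", one uses a group-specific threshold.
def blind_model(record):
    return int(record["score"] > 0.5)


def aware_model(record):
    threshold = 0.4 if record["group"] == "X" else 0.6
    return int(record["score"] > threshold)


records = [
    {"score": 0.5, "group": "X"},
    {"score": 0.9, "group": "Y"},
    {"score": 0.1, "group": "Y"},
]
print(counterfactual_flip_rate(blind_model, records, "group", ["X", "Y"]))  # 0.0
print(counterfactual_flip_rate(aware_model, records, "group", ["X", "Y"]))  # 0.3333333333333333
```

Whether that one flipped record counts as unfair or as appropriate clinical adjustment is a judgement the code can't make for you.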

Universality

A healthcare AI tool should be generalisable outside the controlled environment where it was built.

The generalisation gap is well documented in medicine: a model developed in one context often fails to generalise to even a slightly different one. This will be application-specific, but in general you’d want your model to be as interoperable and transferable as possible.

This can be mitigated by defining the clinical setting, adopting any standards that already exist there, evaluating against a (truly) external dataset, and validating the tool locally before deployment.
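To make the external and local validation step concrete, here is a minimal sketch that compares performance on the development site's test set with performance on data from elsewhere, and applies an acceptance rule before deployment. The 5% `max_drop` threshold and the function names are hypothetical choices for illustration, not something the guidelines specify; in practice you'd pick a clinically justified metric and margin.

```python
def accuracy(y_true, y_pred):
    """Proportion of predictions that match the reference labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def generalisation_gap(internal, external):
    """Internal minus external accuracy; each argument is a (y_true, y_pred) pair."""
    return accuracy(*internal) - accuracy(*external)


def passes_local_validation(internal, external, max_drop=0.05):
    """Hypothetical acceptance rule: allow at most `max_drop` loss of accuracy
    on the external/local data relative to the internal test set."""
    return generalisation_gap(internal, external) <= max_drop


internal = ([1, 0, 1, 0], [1, 0, 1, 0])  # development-site test set
external = ([1, 0, 1, 0], [1, 1, 1, 0])  # data from a different hospital
print(generalisation_gap(internal, external))       # 0.25
print(passes_local_validation(internal, external))  # False
```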

Traceability

Medical AI tools should be developed together with mechanisms for documenting and monitoring the complete trajectory of the AI tool, from development and validation to deployment and usage.

This includes risk management, documentation, continuous quality control, and post-deployment auditing and updating. For anyone in medical research, these are pretty standard recommendations.
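For the post-deployment part, a minimal sketch of continuous monitoring might keep an append-only audit log of every case and track accuracy over a rolling window of recent cases with confirmed outcomes, flagging the tool for review when it drifts below its validated baseline. The class, its parameters (`baseline`, `tolerance`, `window`) and the review rule are all hypothetical; a real deployment would also need to decide how and when ground-truth labels arrive.

```python
from collections import deque


class PerformanceMonitor:
    """Rolling-window accuracy check plus an append-only audit log (hypothetical design)."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline            # accuracy measured at validation
        self.tolerance = tolerance          # acceptable drop before review
        self.recent = deque(maxlen=window)  # only the most recent outcomes
        self.audit_log = []                 # every case, kept for later auditing

    def record(self, case_id, prediction, confirmed_label):
        self.recent.append(prediction == confirmed_label)
        self.audit_log.append((case_id, prediction, confirmed_label))

    def rolling_accuracy(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def needs_review(self):
        """True once the window is full and accuracy is below baseline - tolerance."""
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline=0.9, tolerance=0.05, window=4)
for case_id, (pred, label) in enumerate([(1, 1), (0, 1), (1, 0), (0, 0)]):
    monitor.record(case_id, pred, label)
print(monitor.rolling_accuracy())  # 0.5
print(monitor.needs_review())      # True
```

Waiting for a full window before raising an alert is a deliberate trade-off: it avoids false alarms from the first handful of cases, at the cost of reacting more slowly.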

Usability

The end users should be able to use an AI tool to achieve a clinical goal efficiently and safely in their real world environment.

This involves defining user requirements (although I’d rather hope healthcare professionals would be embedded in the development team anyway), defining human-AI interactions, providing training, and evaluating usability and clinical utility.

To highlight the importance of this, let me point out that in my 12 years of nursing I received formal training only once on any of the equipment I used in hospital (a cardiac monitor), and that includes equipment as important as ventilators. All my training was informal, from other doctors and nurses, who had learnt from other nurses and doctors, some of whom might once have received some training on a lunch break, and so on back to before I was born (some equipment really was older than me). This works, but some equipment had a million features that never got used because they were lost along the chain. And they got lost because they were useless, or at best optional extras.

Keep it simple, make it intuitive, and make sure it’s what’s actually needed in the clinical environment.

Robustness

The ability of a medical AI tool to maintain its performance and accuracy under expected or unexpected variations in the input data.

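I’m not sure about this one, as it seems to overlap with some of the other categories (training with representative data, for instance, sounds a lot like Fairness and Universality). It includes defining sources of data variation, training with representative data, and evaluating robustness.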

Let’s say an earthquake hits just as a CT head is being done: not enough to bring masonry down, but enough to give the scanner a good shake. I wouldn’t expect a classifier to be robust to this unexpected variation, but I would expect it not to attempt classification under such conditions.
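The "don't attempt classification" behaviour is usually called abstention. Here is a minimal sketch: wrap a probabilistic classifier so it refuses to answer when an input feature sits far outside the range seen in training (a crude z-score stand-in for proper out-of-distribution or image-quality checks), or when its top probability is too low. The features (an intensity summary and a motion-artefact score), labels, toy model and both thresholds are hypothetical.

```python
import statistics


class AbstainingClassifier:
    """Wraps a probabilistic classifier and refuses to answer on unusual or uncertain inputs."""

    def __init__(self, model, training_inputs, z_limit=4.0, min_confidence=0.8):
        self.model = model  # callable: feature list -> {label: probability}
        columns = list(zip(*training_inputs))
        self.means = [statistics.fmean(col) for col in columns]
        self.stdevs = [statistics.stdev(col) for col in columns]
        self.z_limit = z_limit
        self.min_confidence = min_confidence

    def is_out_of_distribution(self, x):
        """Flag any feature more than z_limit standard deviations from its training mean."""
        return any(
            abs(value - mean) / sd > self.z_limit
            for value, mean, sd in zip(x, self.means, self.stdevs)
            if sd > 0
        )

    def predict(self, x):
        if self.is_out_of_distribution(x):
            return None, "abstain: input unlike training data"
        probs = self.model(x)
        label, confidence = max(probs.items(), key=lambda item: item[1])
        if confidence < self.min_confidence:
            return None, "abstain: low confidence"
        return label, "ok"


# Hypothetical features: [intensity summary, motion-artefact score]
def toy_model(x):
    return {"bleed": 0.9, "no bleed": 0.1} if x[0] > 0.5 else {"bleed": 0.3, "no bleed": 0.7}


training = [[0.4, 1.0], [0.6, 1.1], [0.5, 0.9], [0.55, 1.0]]
clf = AbstainingClassifier(toy_model, training)
print(clf.predict([0.6, 1.0]))   # ('bleed', 'ok')
print(clf.predict([0.6, 5.0]))   # (None, 'abstain: input unlike training data')
print(clf.predict([0.45, 1.0]))  # (None, 'abstain: low confidence')
```

The second call is the earthquake case: a motion score wildly outside anything seen in training triggers a refusal before the model is even consulted.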

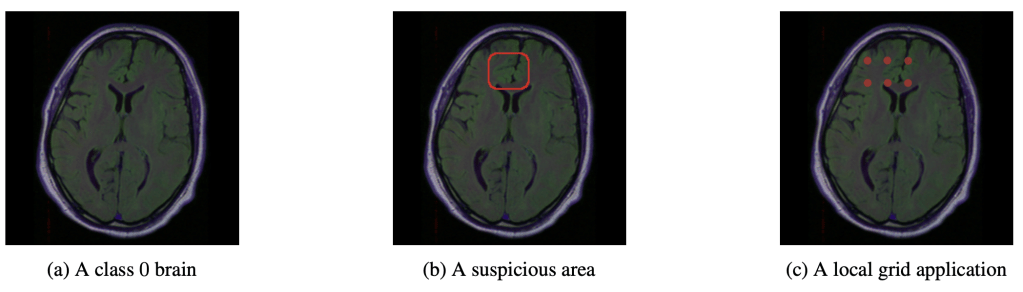

Explainability

Medical AI tools should provide clinically meaningful information about the logic behind the AI decisions.

My favourite, as this is the area I’m working in right now. It includes simply establishing whether explainability is needed (as I discussed briefly before, not everything in medicine needs to be explained to be useful) and, if explanations are provided, evaluating the explanations themselves. Thorough evaluations are currently missing, to the point that some recommendations (such as DECIDE-AI) don’t advise using explainability at all: it’s currently just too unreliable. I’d largely agree with that sentiment, but I’d say it’s only a temporary state while more general methods are refined for medical use.

General recommendations

They also include some general recommendations, which I’ll let you read for yourself in the paper, where a summary brings them together with everything mentioned above.

Quick thoughts

Hopefully this isn’t just the first of a slew of recommendations, and this one really takes hold. It might not be perfect, but it is a dynamic process, as it must be in a rapidly developing field. You can join their network at their website, https://future-ai.eu/, and hopefully get some say in how this unfolds.

Personally, I would have liked to know how these guidelines interact with the various AI laws around the world. For instance, does adhering to these guidelines guarantee conformity with the EU AI Act? And what about best publishing practices? If each stratum isn’t a subset of the one above, then we’re simply compounding paperwork and making compliance less likely.
